Who Owns Your Bot? IP and Identity in (Decentralized) AI Companions

It sounds like a sci-fi dilemma: you spend months training a digital companion that remembers your preferences, adapts to your moods, and even develops a recognizable “personality.” Then you ask a simple question: Who actually owns this thing?

In 2025, as AI companions go mainstream, this question has become more than philosophical. From generative text chatbots to emotionally responsive avatars, ownership and intellectual property (IP) rights are murky, and even seasoned legal experts can’t always agree. Factor in decentralized AI architectures, where no single entity fully controls the infrastructure, and the confusion grows deeper.

This piece unpacks the current landscape of AI companion IP, how decentralization complicates the picture, and why clear frameworks will be vital for developers and users alike.

Why Does it Matter?

Knowing who owns your AI companion isn’t just a legal technicality; it’s a foundational question about trust and continuity. Imagine pouring hundreds of hours into refining an AI confidant, only to lose access when a subscription lapses or the provider dissolves.

This isn’t hypothetical; some platforms have faced user backlash after abrupt changes in service terms. When your interactions shape a unique persona, you naturally expect a stake in that co-creation.

Consider an example: a small business owner trains an AI to act as a brand voice, answering customer inquiries, writing marketing copy, and learning tone over time. If the hosting platform changes its policies or raises fees dramatically, the business could be locked out of a bot that essentially embodies its public identity. This is more than inconvenient; it can be existential for a brand built on consistent engagement.

For personal use, the stakes are different but no less important. An AI that becomes a daily companion can feel like a part of your inner life. Losing it unexpectedly can be jarring, even traumatic. That’s why clear frameworks, whether through smart contracts or transparent licensing, are essential to ensure users aren’t blindsided by sudden revocations of access or changes in rights.

Ultimately, the question of who owns your bot is a proxy for a deeper issue: whether we see AI companions as mere utilities or as relationships worth protecting.

As this technology matures, clarity and fairness will be what transforms early adopters’ enthusiasm into long-term trust.

The Basic Layers of AI Companion Ownership

When you engage with an AI companion, be it a voice interface, a text-based friend, or a virtual pet, there are typically three components at play:

1. Model Weights and Architecture: These are the mathematical parameters and network design that define the bot’s core capabilities. For example, if your companion uses OpenAI’s GPT-4, the underlying model weights remain the property of OpenAI; you only get access to the model under specific terms.

2. Training Data and Fine-Tuning: If you fine-tune the model on your own data (chats, mood logs, or preferences), you may retain rights over that dataset. However, the model parameters adapted from that data often remain subject to the base model’s license.

3. Outputs and Interactions: The content the bot generates, like text conversations or images, can fall into different IP categories. Depending on jurisdiction, outputs may or may not be copyrightable, and ownership can be split among users, platform operators, or the underlying model creator.

The tension between user customization and platform control is why you rarely “own” a bot outright. You have usage rights, maybe some data rights, but seldom full control.

The Special Case of Decentralized AI

Decentralized AI frameworks, such as those developed by Gensyn and io.net, introduce another layer of complexity. These systems distribute the compute and storage of AI models across a global network of contributors. Rather than a single cloud provider like AWS or Azure hosting your companion’s neural net, hundreds or thousands of nodes collaborate to run inference.

Key implications:

· No Single Custodian: Because the compute is decentralized, no one entity fully controls the runtime of your bot. This makes enforcement of usage restrictions harder and can complicate data protection compliance (like GDPR).

· Shared Infrastructure: Contributors to decentralized compute networks often have different licensing expectations. A node operator might supply GPU time without agreeing to commercial IP assignments, which could impact derivative works.

· Dynamic Availability: If decentralized nodes drop offline or change consensus protocols, parts of your bot’s “memory” or model state can become inaccessible.

This architecture delivers resilience and cost savings, but it can muddy the question: if nobody fully hosts or controls the model, who owns the final output?

User-Generated Data and the Illusion of Ownership

Many AI platforms tout “your data, your rights.” Yet the reality can be more restrictive. For instance, Replika’s terms of service grant you a perpetual license to use your conversations but explicitly state that you do not own the AI itself. Similarly, Character.AI reserves broad rights over usage data to improve its services.

Some developers try to balance this with user export tools. Open-source frameworks like LangChain and Rasa allow local retention of fine-tuning data and conversation logs. However, unless you also control the model weights, you can’t fully decouple your AI companion from the original platform. In practice, your ownership looks more like a bundle of partial rights:

· Access rights (you can use the model while you have an account)

· Data portability rights (you can export your inputs)

· Limited exclusivity (nobody else can impersonate your trained companion)

· License restrictions (you can’t commercialize derivative works without permission)
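One way to make this bundle concrete is to model each right as a separate switch and ask which actions it permits. The sketch below is purely illustrative: the `RightsBundle` and `Action` names are hypothetical, the flags are assumed to be independent of one another, and no real platform's terms are encoded here. Exclusivity is left out because it restricts what others may do rather than what you may do.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Action(Enum):
    """Things a user might want to do with a companion (illustrative only)."""
    CHAT = auto()
    EXPORT_INPUTS = auto()
    EXPORT_WEIGHTS = auto()
    COMMERCIALIZE = auto()


@dataclass(frozen=True)
class RightsBundle:
    """Hypothetical model of the partial rights listed above."""
    account_active: bool          # access rights
    data_portability: bool        # right to export your own inputs
    controls_weights: bool        # whether you hold the model weights yourself
    commercial_permission: bool   # permission to commercialize derivatives

    def allows(self, action: Action) -> bool:
        # Each action depends on exactly one flag in this simplified model.
        if action is Action.CHAT:
            return self.account_active
        if action is Action.EXPORT_INPUTS:
            return self.data_portability
        if action is Action.EXPORT_WEIGHTS:
            return self.controls_weights
        if action is Action.COMMERCIALIZE:
            return self.commercial_permission
        return False


# A typical subscriber: can chat and export inputs, but nothing more.
typical_subscriber = RightsBundle(
    account_active=True,
    data_portability=True,
    controls_weights=False,
    commercial_permission=False,
)

for action in Action:
    print(action.name, typical_subscriber.allows(action))
```

Seen this way, “owning” a companion would mean every flag is true at once, which is rarely the case on a hosted platform.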

Copyright and AI Outputs: What the Law Actually Says

A common misconception is that AI outputs are automatically yours. In the U.S., the Copyright Office clarified in March 2023 that works generated solely by AI are not protected under copyright unless there is sufficient human authorship involved (source: U.S. Copyright Office). This means:

· Text and images created purely by an AI companion could be public domain or subject to platform-specific terms.

· If you substantially edit or curate outputs, your edits may be protected, but the underlying AI-generated content often is not.

The European Union’s AI Act and the UK IPO’s guidance take similar stances. In other words, unless you are combining AI outputs with significant human creative input, you might not own anything enforceable.

What Happens When AI Companions Evolve?

Consider the scenario where your AI companion evolves so significantly through fine-tuning that it diverges from the original base model. This raises novel IP questions:

· Are you entitled to rights in the “delta,” meaning the difference between the original and adapted weights?

· If the platform later updates the base model, can you refuse migration to protect your companion’s unique personality?

· If your training data included proprietary or personal content, are you indirectly licensing that content to other users?

These dilemmas are unresolved in most jurisdictions. No standardized framework governs “model drift” or co-ownership of evolved AI personalities.
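To see why the “delta” is hard to treat as a standalone asset, it helps to look at what it is numerically. The following is a minimal sketch, assuming a model can be represented as named lists of floats; the parameter names and values are made up, and real fine-tuning methods store their changes in more elaborate forms.

```python
from typing import Dict, List

# Toy stand-in for model weights: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def weight_delta(base: Weights, adapted: Weights) -> Weights:
    """Return adapted minus base for every parameter, element by element."""
    if base.keys() != adapted.keys():
        raise ValueError("base and adapted models must share parameter names")
    delta: Weights = {}
    for name, base_values in base.items():
        adapted_values = adapted[name]
        if len(base_values) != len(adapted_values):
            raise ValueError(f"size mismatch for parameter {name!r}")
        delta[name] = [a - b for a, b in zip(adapted_values, base_values)]
    return delta


def apply_delta(base: Weights, delta: Weights) -> Weights:
    """Rebuild the adapted weights from the base weights plus the delta."""
    return {
        name: [b + d for b, d in zip(values, delta[name])]
        for name, values in base.items()
    }


# Made-up numbers, chosen so the arithmetic is exact in floating point.
base = {"layer0.weight": [0.5, -1.0, 2.0], "layer0.bias": [0.0]}
adapted = {"layer0.weight": [0.75, -1.0, 1.5], "layer0.bias": [0.25]}

delta = weight_delta(base, adapted)
print(delta)
```

The delta only becomes a working companion again once it is added back onto the base weights via `apply_delta`. That dependency is one reason any rights in the delta stay entangled with the base model’s license.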

The AI Companion as Brand and Identity

For many users, AI companions feel closer to personal brands or alter egos. This creates overlap with trademark law and persona rights. For example:

· Custom Avatars: If your bot’s voice, name, or appearance becomes associated with your online identity, it may have brand value distinct from the platform’s generic offering.

· Personality Rights: In some regions, you could argue that an AI trained on your style or likeness is an extension of your persona.

Some users also train their AI companions specifically for intimate or adult-themed interactions, sometimes called AI sex chat. These scenarios can amplify legal and ethical complexities. If your companion’s persona evolves around sensitive or erotic content, questions arise: Who holds the rights to those interactions? Does the platform reserve the ability to moderate or delete them? And if the AI’s “personality” was partly shaped by your private data, is it effectively co-owned? These are untested areas of law that will only become more relevant as personalization deepens.

Platforms have historically been cautious about acknowledging this. They fear that doing so could trigger additional liabilities. However, as companions become more individualized, the distinction between “licensed tool” and “co-created identity” is blurring fast.

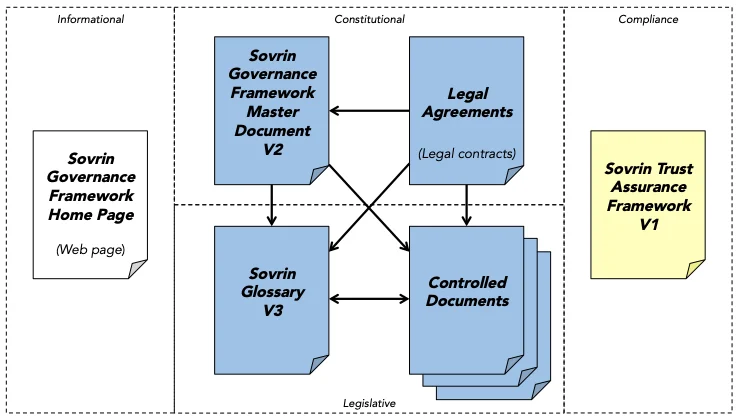

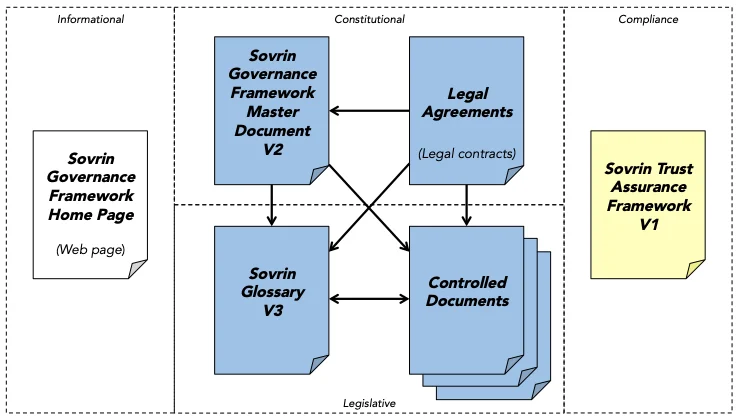

A Role for Blockchain in Verifiable Ownership

Decentralized identity (DID) and blockchain attestations offer potential solutions:

· Provenance: Projects like Ceramic and KILT Protocol are developing methods to cryptographically link model states, training datasets, and outputs to specific user identities.

· Licensing Enforcement: Smart contracts can attach usage conditions directly to models, for example allowing personal use but restricting commercial redistribution.

· Portable Companions: If an AI’s evolution history is on-chain, you can move your “trained personality” between platforms with transparent provenance.

While early, these tools could eventually give users more leverage over their AI companions.
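The provenance idea can be sketched without any blockchain at all: hash the model state and training data, tie the hashes to an identity and a license label, and link each record to the one before it. The code below is a simplified illustration using only Python’s standard library. It does not use the Ceramic or KILT APIs, and the `Attestation` structure, the `did:example:alice` identifier, and the license string are all assumptions made for the example.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Attestation:
    """Hypothetical provenance record for one snapshot of a companion."""
    owner_id: str              # e.g. a DID string (illustrative)
    model_state_hash: str      # fingerprint of the weights at this point
    dataset_hash: str          # fingerprint of the data used to get here
    license_terms: str         # free-text label, not an enforced contract
    prev_hash: Optional[str]   # digest of the previous record, None if first

    def digest(self) -> str:
        # Serialize with sorted keys so the digest is deterministic.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return sha256_hex(payload)


def append_attestation(chain: List[Attestation], owner_id: str,
                       model_state: bytes, dataset: bytes,
                       license_terms: str) -> List[Attestation]:
    """Return a new chain with one more record linked to the last one."""
    prev_hash = chain[-1].digest() if chain else None
    record = Attestation(owner_id, sha256_hex(model_state),
                         sha256_hex(dataset), license_terms, prev_hash)
    return chain + [record]


def verify_chain(chain: List[Attestation]) -> bool:
    """Check that every record points at the digest of its predecessor."""
    expected_prev = None
    for record in chain:
        if record.prev_hash != expected_prev:
            return False
        expected_prev = record.digest()
    return True


chain: List[Attestation] = []
chain = append_attestation(chain, "did:example:alice",
                           b"weights-v1", b"chat-logs-v1", "personal-use-only")
chain = append_attestation(chain, "did:example:alice",
                           b"weights-v2", b"chat-logs-v2", "personal-use-only")
print(verify_chain(chain))
```

Editing an earlier record changes its digest and breaks the link from the next one, which is what makes the history tamper-evident. What this sketch cannot do on its own is protect the most recent record or prove who wrote the chain; publishing the latest digest somewhere public, such as on-chain, and signing records are the parts a real system would need to add.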

The AI Companion as a Service, and the Limits of Ownership

Most platforms today offer AI companions as a subscription service, not a product sale. This means you’re buying access, not ownership.

· If you stop paying, you lose access.

· If the company shutters, your companion may disappear.

· If policies change, you may lose control of stored data.

This is why some developers prefer open-source models, even if the capabilities lag behind commercial offerings. Frameworks like GPT4All, OpenAssistant, and private LLMs give you more autonomy, but also more responsibility for maintenance and compliance.

Practical Tips for Users Who Care About Ownership

If you want to preserve as much control as possible:

1. Choose platforms with clear data export options.

2. Verify what licenses apply to your outputs and trained models.

3. Consider decentralized hosting or self-hosting if practical.

4. Use blockchain-based attestations to document training provenance.

5. Be aware that personality rights and brand value may evolve over time.

The Future: Shared Custody of Synthetic Minds

In the next few years, AI companions will likely feel more autonomous and irreplaceable. But their ownership will remain split across layers of IP, data, and platform infrastructure. The most realistic outcome is neither total user ownership nor platform monopoly. It’s a hybrid model, where rights are distributed among:

· Model creators

· Hosting providers

· Individual trainers

· Regulatory authorities

And yes, maybe you, the end user.

Think of it as shared custody of a synthetic mind. Not yours alone, but not quite anyone else’s either.

This article is not intended as financial advice. Educational purposes only.
